Ingestion Aggregations

Aggregation Config

{
  "tableConfig": {
    "tableName": "...",
    "ingestionConfig": {
      "aggregationConfigs": [{
        "columnName": "aggregatedFieldName",
        "aggregationFunction": "<aggregationFunction>(<originalFieldName>)"
      }]
    }
  }
}

Requirements

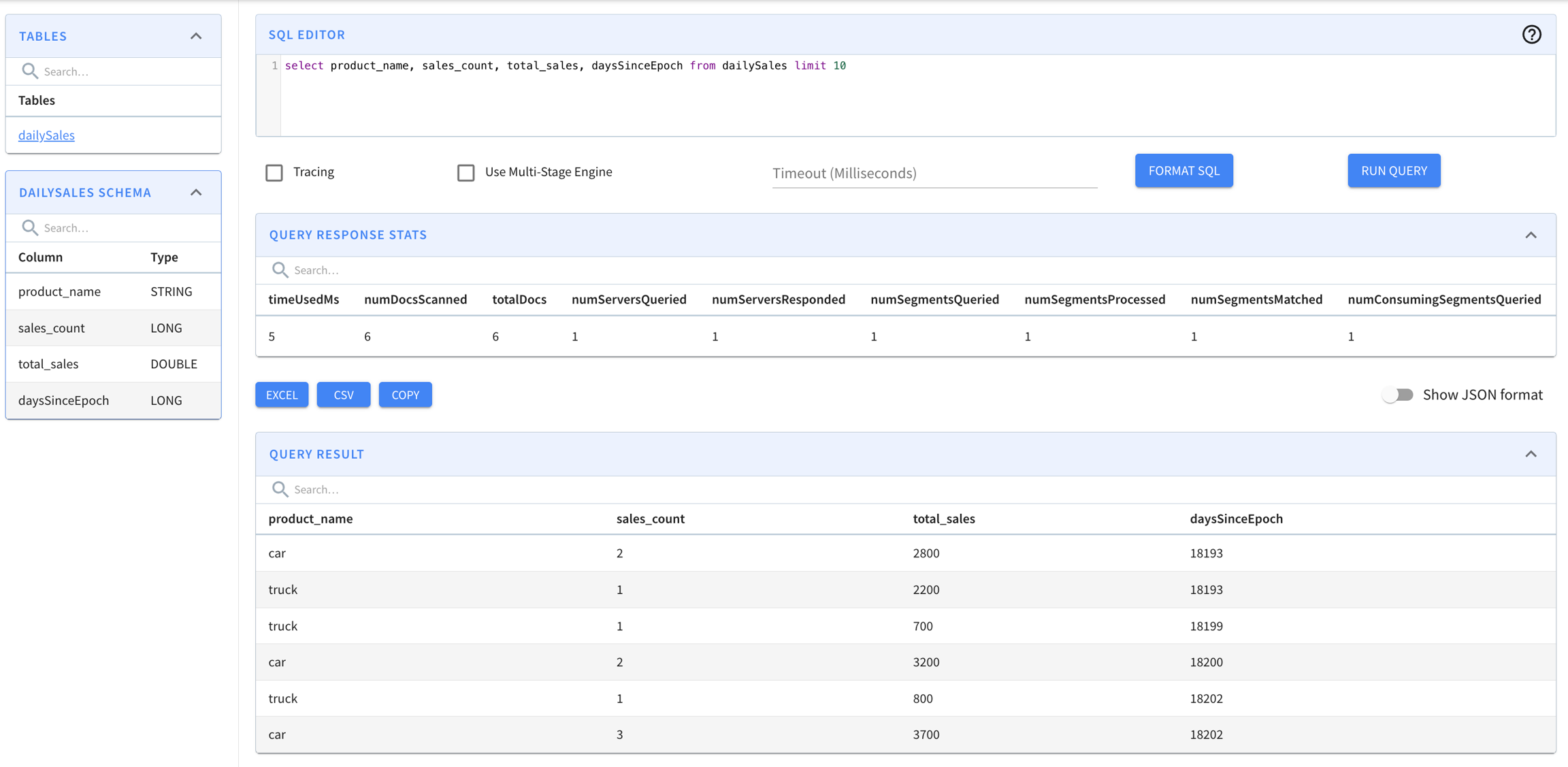
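The `aggregationFunction` value in the config above pairs a function with a source field using the `<aggregationFunction>(<originalFieldName>)` pattern. As a minimal sketch of that pattern, the Python below splits such a string into its two parts. `parse_aggregation_function` is a hypothetical helper written for illustration, not a Pinot API, and it assumes a single, unnested argument; the `total_sales` / `SUM(price)` pair is likewise an assumed example.

```python
import re
from typing import Tuple

# Hypothetical helper (not a Pinot API): split "<function>(<field>)" into its parts.
# Assumes exactly one unnested argument, e.g. "SUM(price)" or "COUNT(*)".
_PATTERN = re.compile(r"^\s*([A-Za-z_][A-Za-z0-9_]*)\s*\(\s*([^()]+?)\s*\)\s*$")


def parse_aggregation_function(expr: str) -> Tuple[str, str]:
    match = _PATTERN.match(expr)
    if match is None:
        raise ValueError(f"not of the form <function>(<field>): {expr!r}")
    function_name, field_name = match.groups()
    # Normalize the function name to upper case; leave the field name untouched.
    return function_name.upper(), field_name


# Assumed example entry from aggregationConfigs.
config = {
    "columnName": "total_sales",
    "aggregationFunction": "SUM(price)",
}
print(parse_aggregation_function(config["aggregationFunction"]))  # ('SUM', 'price')
```

Under this sketch, a string such as `COUNT(*)` parses to `('COUNT', '*')`.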

Example Scenario

Example Input Data

Schema

Table Config

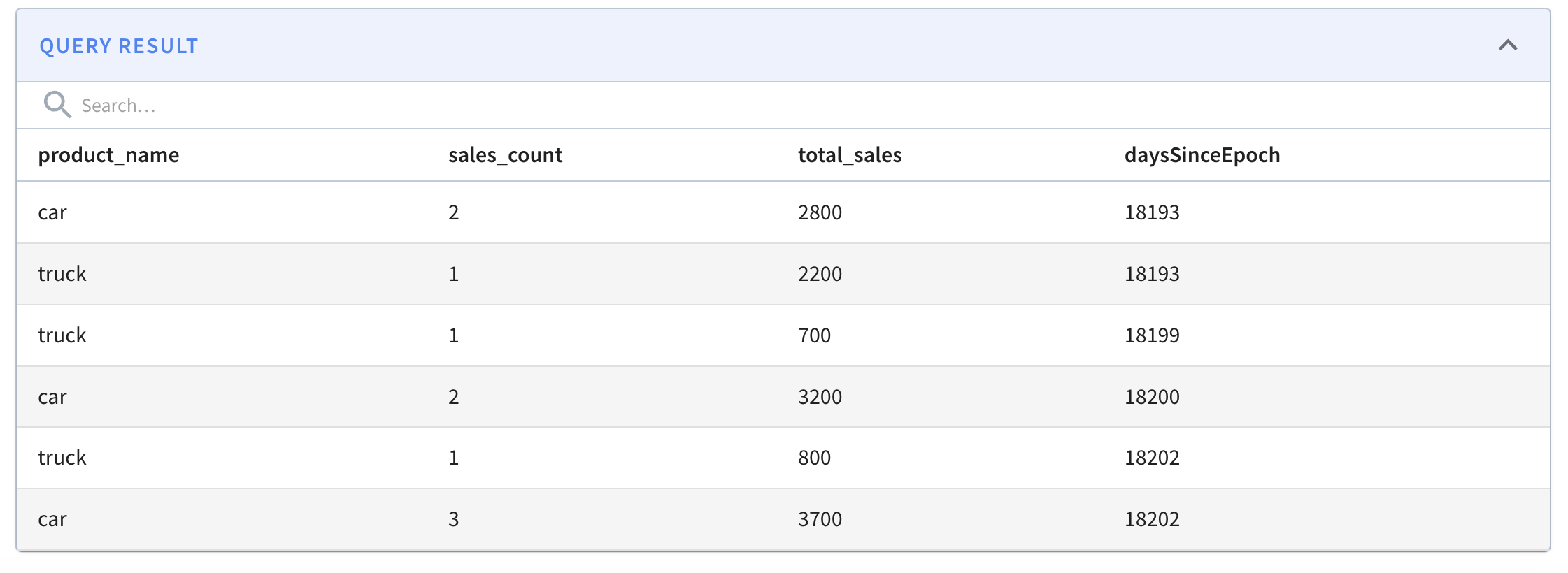

Example Final Table

| product_name | sales_count | total_sales | daysSinceEpoch |
|---|---|---|---|
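Judging from its columns, the final table holds one row per `product_name` and `daysSinceEpoch`, with `sales_count` and `total_sales` rolled up from the incoming records. The Python below is a minimal sketch of that rollup. It assumes the input records carry hypothetical `product_name`, `price`, and `timestamp` (epoch milliseconds) fields, that `sales_count` comes from `COUNT(*)` and `total_sales` from `SUM(price)`, and that `daysSinceEpoch` is derived from `timestamp`; these names, the `rollup` helper, and the sample rows are illustrative, not the original example data. It models the resulting table, not how Pinot performs the merge.

```python
from collections import defaultdict

MS_PER_DAY = 24 * 60 * 60 * 1000

# Illustrative input records (hypothetical field names and values).
records = [
    {"product_name": "car", "price": 1500.0, "timestamp": 1571900400000},
    {"product_name": "car", "price": 2200.0, "timestamp": 1571900400000},
    {"product_name": "truck", "price": 3500.0, "timestamp": 1571900400000},
    {"product_name": "car", "price": 1100.0, "timestamp": 1572000000000},
]


def rollup(rows):
    # Key on every dimension and time column; rows sharing a key collapse into one.
    totals = defaultdict(lambda: {"sales_count": 0, "total_sales": 0.0})
    for row in rows:
        days_since_epoch = row["timestamp"] // MS_PER_DAY
        key = (row["product_name"], days_since_epoch)
        totals[key]["sales_count"] += 1             # COUNT(*)
        totals[key]["total_sales"] += row["price"]  # SUM(price)
    return [
        {"product_name": name, "daysSinceEpoch": day, **metrics}
        for (name, day), metrics in totals.items()
    ]


for out in rollup(records):
    print(out)
```

Four input records become three output rows here, because the two `car` sales on the same day share a key.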

Allowed Aggregation Functions

| function name | notes |
|---|---|
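Conceptually, ingestion aggregation folds each new record's value into the stored row that shares all of its dimension and time values, so an aggregation function needs a well-defined way to merge one value into a running result. The Python below is a minimal sketch of that fold for SUM, MIN, MAX, and COUNT; it is an illustration, not Pinot's implementation, the `MERGERS` map and `fold` helper are hypothetical, and the table above remains the authority on which functions are allowed.

```python
from typing import Callable, Dict, Iterable, Optional

# Each merge folds one incoming value into the running result (None = no row yet).
Merge = Callable[[Optional[float], float], float]

MERGERS: Dict[str, Merge] = {
    "SUM": lambda acc, v: v if acc is None else acc + v,
    "MIN": lambda acc, v: v if acc is None else min(acc, v),
    "MAX": lambda acc, v: v if acc is None else max(acc, v),
    # COUNT(*) ignores the incoming value and adds one per record.
    "COUNT": lambda acc, v: 1 if acc is None else acc + 1,
}


def fold(function_name: str, values: Iterable[float]) -> Optional[float]:
    merge = MERGERS[function_name]
    acc: Optional[float] = None
    for value in values:
        acc = merge(acc, value)
    return acc


prices = [1500.0, 2200.0, 900.0]
for name in MERGERS:
    print(name, fold(name, prices))
# prints: SUM 4600.0 / MIN 900.0 / MAX 2200.0 / COUNT 3 (one per line)
```

Each of these merges is associative and commutative, so the result does not depend on the order in which records arrive.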

Frequently Asked Questions

Why not use a star-tree index?

When should I not use ingestion aggregation?

What if I already use the aggregateMetrics setting?

Does this config work for offline data?

Why do all metrics need to be aggregated?

Why does no data show up when I enable aggregationConfigs?
