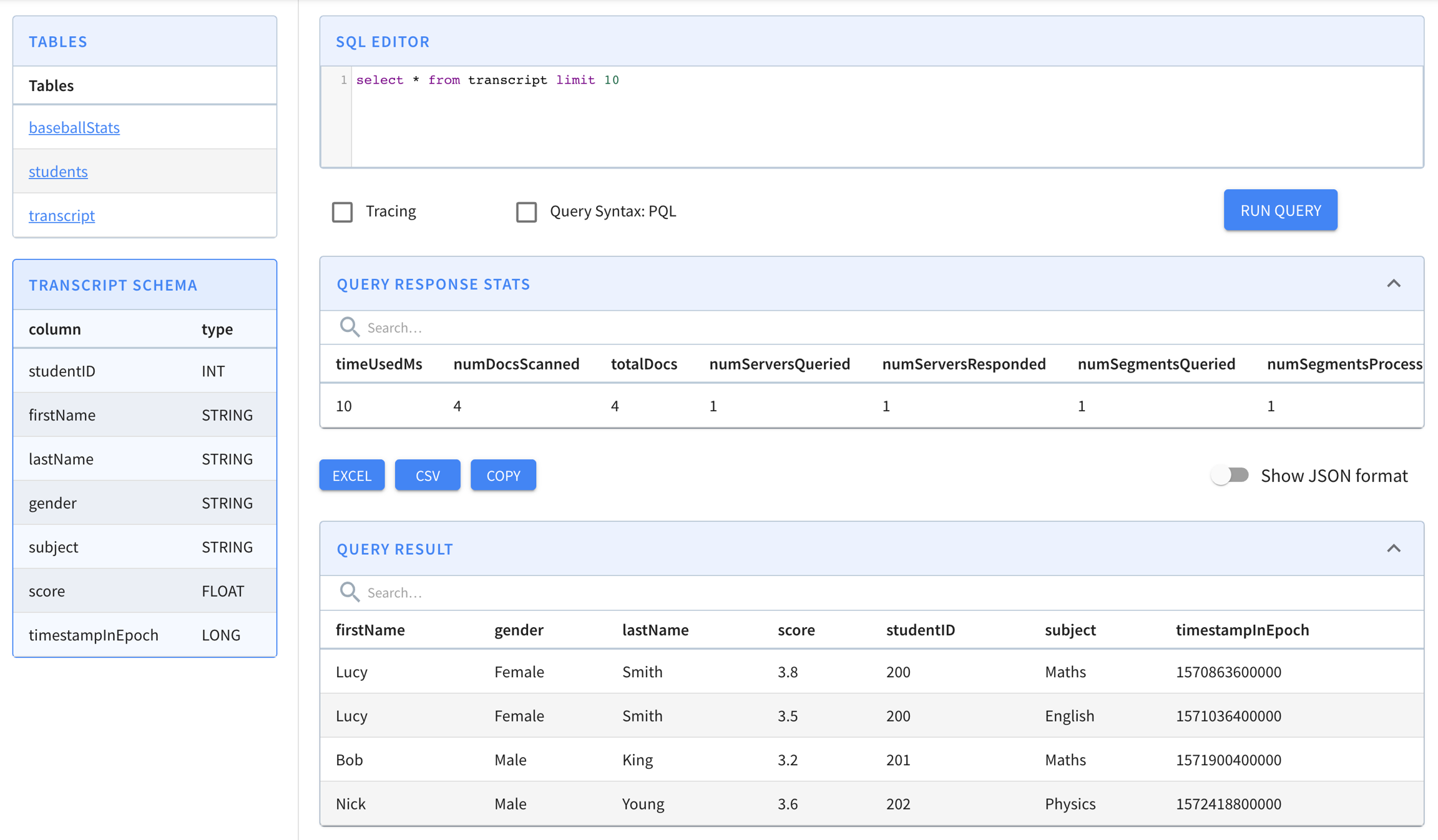

Stream ingestion example

The Docker instructions on this page are still a work in progress (WIP).

Data Stream

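With Docker, start a Kafka container on the `pinot-demo` network:

```shell
docker run \
    --network pinot-demo --name=kafka \
    -e KAFKA_ZOOKEEPER_CONNECT=manual-zookeeper:2181/kafka \
    -e KAFKA_BROKER_ID=0 \
    -e KAFKA_ADVERTISED_HOST_NAME=kafka \
    -d bitnami/kafka:latest
```

Then create the `transcript-topic` topic inside that container:

```shell
docker exec \
    -t kafka \
    /opt/kafka/bin/kafka-topics.sh \
    --zookeeper manual-zookeeper:2181/kafka \
    --partitions=1 --replication-factor=1 \
    --create --topic transcript-topic
```

Alternatively, to run Kafka locally without Docker, start it with `pinot-admin.sh`:

```shell
bin/pinot-admin.sh StartKafka -zkAddress=localhost:2123/kafka -port 9876
```

Then create the topic on that broker (port 9876):

```shell
bin/kafka-topics.sh --create --bootstrap-server localhost:9876 --replication-factor 1 --partitions 1 --topic transcript-topic
```

Creating a schema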
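A Pinot schema declares the table's columns and splits them into dimensions, metrics, and a date-time column. The sketch below writes one to a file with a shell heredoc. The schema name `transcript`, the file name `transcript-schema.json`, and every column (`studentID`, `firstName`, `lastName`, `subject`, `score`, `timestampInEpoch`) are hypothetical choices for illustration, not values given on this page.

```shell
#!/bin/sh
# Hypothetical schema for the transcript-topic example.
# Schema name, file name, and columns are assumptions; adjust them to your data.
set -eu

SCHEMA_FILE="transcript-schema.json"   # assumed file name

cat > "$SCHEMA_FILE" <<'EOF'
{
  "schemaName": "transcript",
  "dimensionFieldSpecs": [
    { "name": "studentID", "dataType": "INT" },
    { "name": "firstName", "dataType": "STRING" },
    { "name": "lastName", "dataType": "STRING" },
    { "name": "subject", "dataType": "STRING" }
  ],
  "metricFieldSpecs": [
    { "name": "score", "dataType": "FLOAT" }
  ],
  "dateTimeFieldSpecs": [
    {
      "name": "timestampInEpoch",
      "dataType": "LONG",
      "format": "1:MILLISECONDS:EPOCH",
      "granularity": "1:MILLISECONDS"
    }
  ]
}
EOF

echo "Wrote $SCHEMA_FILE"
```

The time column is stored as a `LONG` holding epoch milliseconds, which is why its `format` is `1:MILLISECONDS:EPOCH`; a real-time table typically needs such a column so that segments can be organized by time.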

Creating a table configuration
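A real-time table configuration tells Pinot which stream to consume and how to decode it. The sketch below writes a minimal `REALTIME` config that reads `transcript-topic` from the broker on `localhost:9876`, the port used by the `pinot-admin.sh StartKafka` command on this page (a Docker setup would point at the Kafka container instead). It assumes a hypothetical schema named `transcript` with a time column `timestampInEpoch`. The file name is an assumption, and the stream property and class names follow the older `tableIndexConfig.streamConfigs` layout with Pinot's Kafka 2.x plugin; check them against the Pinot version you run.

```shell
#!/bin/sh
# Hypothetical REALTIME table config consuming transcript-topic.
# Table/schema names, time column, and file name are assumptions;
# plugin class names should be verified for your Pinot release.
set -eu

TABLE_CONFIG_FILE="transcript-table-realtime.json"   # assumed file name

cat > "$TABLE_CONFIG_FILE" <<'EOF'
{
  "tableName": "transcript",
  "tableType": "REALTIME",
  "segmentsConfig": {
    "timeColumnName": "timestampInEpoch",
    "schemaName": "transcript",
    "replicasPerPartition": "1"
  },
  "tenants": {},
  "tableIndexConfig": {
    "loadMode": "MMAP",
    "streamConfigs": {
      "streamType": "kafka",
      "stream.kafka.consumer.type": "lowlevel",
      "stream.kafka.topic.name": "transcript-topic",
      "stream.kafka.decoder.class.name": "org.apache.pinot.plugin.stream.kafka.KafkaJSONMessageDecoder",
      "stream.kafka.consumer.factory.class.name": "org.apache.pinot.plugin.stream.kafka20.KafkaConsumerFactory",
      "stream.kafka.broker.list": "localhost:9876",
      "stream.kafka.consumer.prop.auto.offset.reset": "smallest"
    }
  },
  "metadata": {}
}
EOF

echo "Wrote $TABLE_CONFIG_FILE"
```

Setting `auto.offset.reset` to `smallest` makes the consumer start from the earliest offset in the topic, so records produced before the table was created are still ingested.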

Uploading your schema and table configuration
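The schema and table configuration can be submitted together with the `AddTable` command of `pinot-admin.sh`. The sketch wraps it in a small function that checks both files exist before calling the admin tool. The function name `upload_table` and the `PINOT_HOME` variable (defaulting to the current directory) are hypothetical conveniences, not part of Pinot.

```shell
#!/bin/sh
# Sketch: upload a schema and table config with pinot-admin.sh AddTable.
# upload_table and PINOT_HOME are hypothetical names used for illustration.
set -eu

upload_table() {
  schema_file="$1"
  table_config_file="$2"
  # Fail early with a clear message if either file is missing.
  for f in "$schema_file" "$table_config_file"; do
    if [ ! -f "$f" ]; then
      echo "missing file: $f" >&2
      return 1
    fi
  done
  # -exec makes AddTable actually send the request instead of only printing it.
  "${PINOT_HOME:-.}/bin/pinot-admin.sh" AddTable \
    -schemaFile "$schema_file" \
    -tableConfigFile "$table_config_file" \
    -exec
}
```

For example, `upload_table transcript-schema.json transcript-table-realtime.json` would upload a schema and config saved under those (assumed) file names.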

Loading sample data into stream
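To exercise the pipeline, the topic needs some records. The sketch below writes a few made-up transcript rows as newline-delimited JSON, one object per line, using hypothetical columns (`studentID`, `firstName`, `lastName`, `subject`, `score`, `timestampInEpoch`). The file name `transcript.json` and all values are invented for illustration.

```shell
#!/bin/sh
# Writes hypothetical sample records, one JSON object per line.
# Every value here is made up; column names are assumptions.
set -eu

DATA_FILE="transcript.json"   # assumed file name

cat > "$DATA_FILE" <<'EOF'
{"studentID":201,"firstName":"Alice","lastName":"Smith","subject":"Maths","score":3.8,"timestampInEpoch":1571900400000}
{"studentID":202,"firstName":"Bob","lastName":"Brown","subject":"Chemistry","score":3.6,"timestampInEpoch":1572418800000}
{"studentID":203,"firstName":"Carol","lastName":"Jones","subject":"English","score":3.5,"timestampInEpoch":1572678000000}
EOF

echo "Wrote $(wc -l < "$DATA_FILE") records to $DATA_FILE"
```

Kafka's console producer sends each input line as a separate message, so the file can then be pushed to the topic with `bin/kafka-console-producer.sh --bootstrap-server localhost:9876 --topic transcript-topic < transcript.json` (adjust the broker address for a Docker setup).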

Ingesting streaming data
