Rebalance Servers

The rebalance operation is used to recompute the assignment of brokers or servers in the cluster. This is not a single command, but rather a series of steps that need to be taken.

For servers, the rebalance operation balances the distribution of segments among the servers used by a Pinot table. This is typically done after capacity changes, config changes such as replication or segment assignment strategy, or migration of the table to a different tenant.

Changes that require a rebalance

Below are changes that need to be followed by a rebalance.

Capacity changes

Increasing/decreasing replication for a table

Changing segment assignment for a table

Moving table from one tenant to a different tenant

Capacity changes

These are typically done when downsizing/upsizing a cluster or replacing nodes of a cluster.

Tenants and tags

Every server added to the Pinot cluster has tags associated with it. A group of servers with the same tag forms a Server Tenant.

By default, a server in the cluster is added to the DefaultTenant, i.e. it is tagged as DefaultTenant_OFFLINE and DefaultTenant_REALTIME.
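As a rough illustration of how tags define tenants, the sketch below derives the two server tags for a tenant name and groups instances by tag. The instance names and the helper names `server_tags_for_tenant` and `group_by_tag` are hypothetical; only the `<tenant>_OFFLINE` / `<tenant>_REALTIME` naming comes from the text above.

```python
from collections import defaultdict


def server_tags_for_tenant(tenant):
    """Return the server tags a tenant name maps to (naming as described above)."""
    return [f"{tenant}_OFFLINE", f"{tenant}_REALTIME"]


def group_by_tag(instance_tags):
    """Group instance names by tag; all servers sharing a tag form one tenant."""
    tenants = defaultdict(list)
    for instance, tags in instance_tags.items():
        for tag in tags:
            tenants[tag].append(instance)
    return dict(tenants)


# Hypothetical cluster with two servers left on the default tenant.
cluster = {
    "Server_host1_8098": server_tags_for_tenant("DefaultTenant"),
    "Server_host2_8098": server_tags_for_tenant("DefaultTenant"),
}
tenants = group_by_tag(cluster)
```

Here `tenants` maps each tag to the servers carrying it, which mirrors how a table's tenant setting selects its servers.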

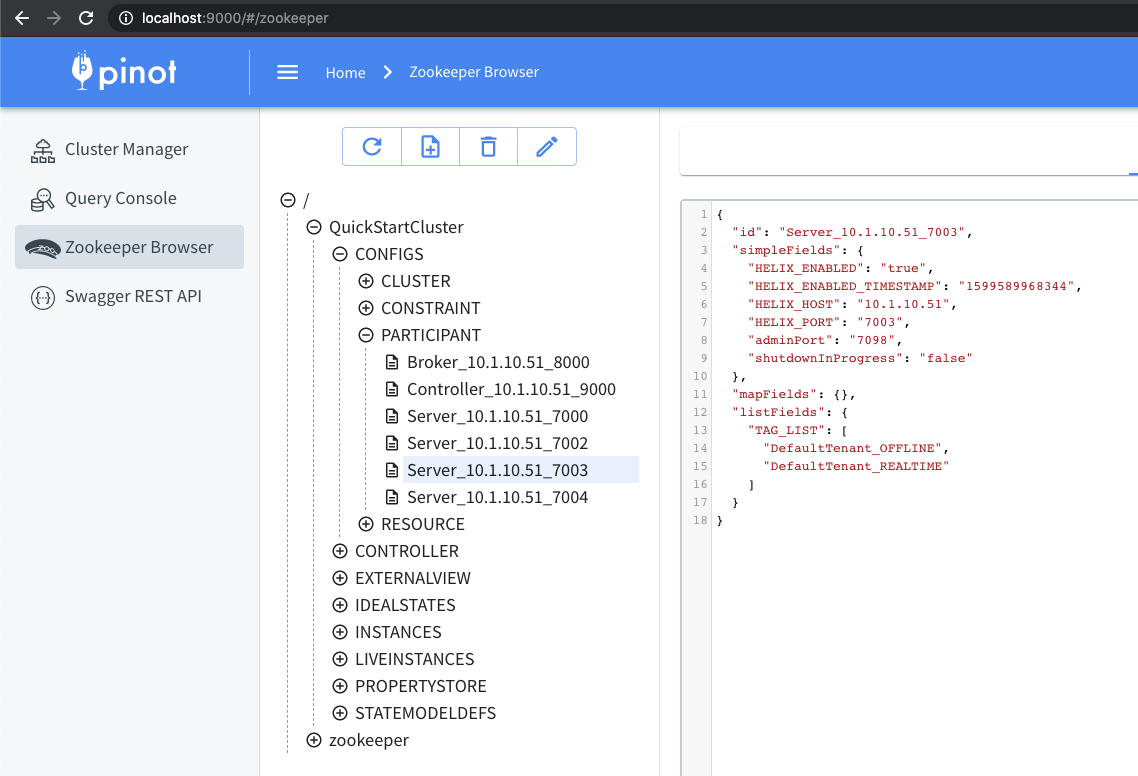

Below is an example of how this looks in the znode, as seen in ZooInspector.

A Pinot table config has a tenants section, to define the tenant to be used by the table. The Pinot table will use all the servers which belong to the tenant as described in this config. For more details about this, see the Tenants section.

Updating tags

0.6.0 onwards

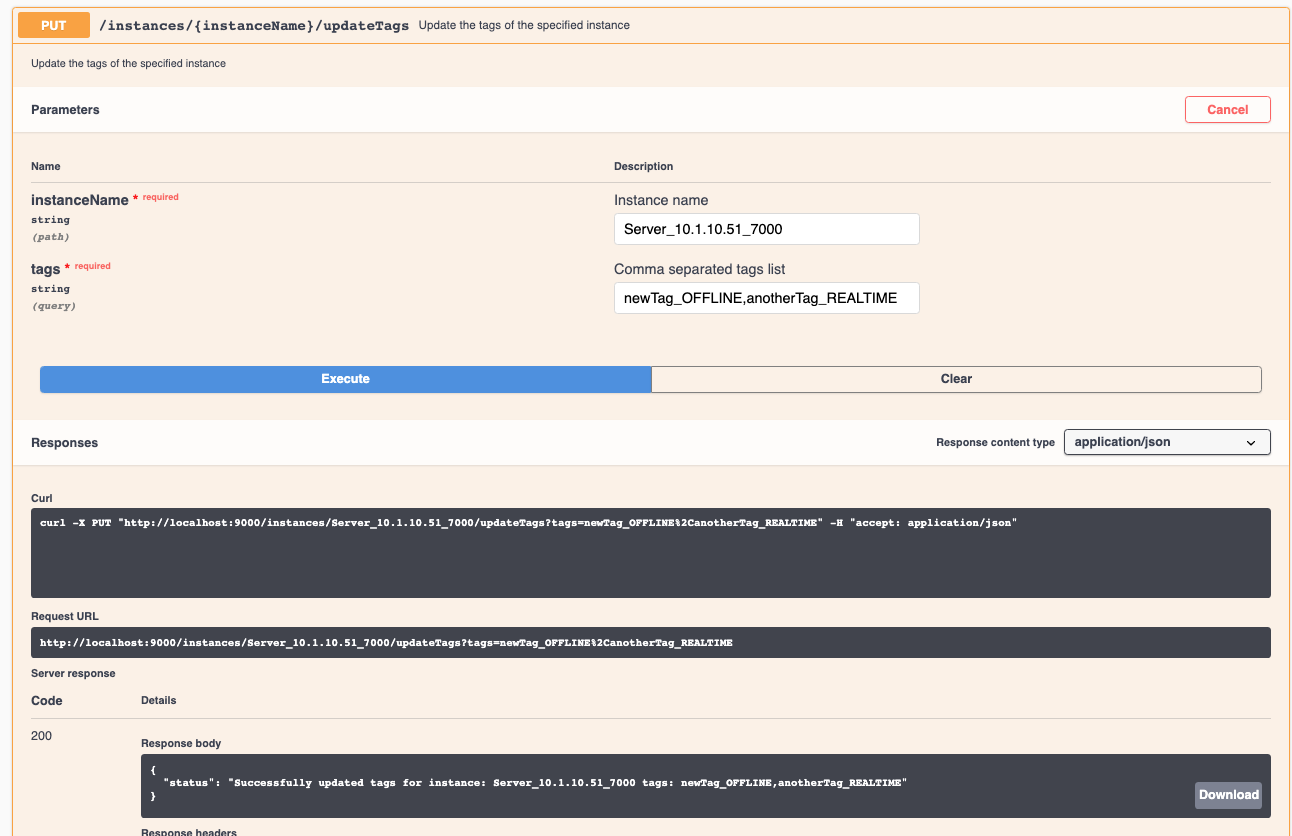

To change the server tags, use the following API.

PUT /instances/{instanceName}/updateTags?tags=<comma separated tags>
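A minimal sketch of building this request with Python's standard library follows. The controller address `http://localhost:9000`, the instance name, and the tag values are assumptions for illustration; the request is only constructed here, not sent.

```python
from urllib.parse import quote, urlencode
from urllib.request import Request

CONTROLLER = "http://localhost:9000"  # assumption: adjust to your controller address


def build_update_tags_request(instance_name, tags, controller=CONTROLLER):
    """Build (but do not send) the PUT request that replaces an instance's tags."""
    query = urlencode({"tags": ",".join(tags)})  # comma separated, URL-encoded
    url = f"{controller}/instances/{quote(instance_name, safe='')}/updateTags?{query}"
    return Request(url, method="PUT")


# Hypothetical example: move a server's OFFLINE tag to a new tenant.
req = build_update_tags_request(
    "Server_host1_8098", ["newTenant_OFFLINE", "DefaultTenant_REALTIME"]
)
```

Passing `req` to `urllib.request.urlopen` would issue the call against a running controller.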

0.5.0 and prior

The updateTags API is not available in 0.5.0 and prior. Instead, use this API to update the instance.

PUT /instances/{instanceName}

For example,

NOTE

The output of GET and the input of PUT don't match for this API. Make sure to use the right payload as shown in the example above. In particular, notice that the instance name "Server_host_port" is split into separate fields in this PUT API.
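To illustrate the splitting described in the note, here is a hedged sketch that derives a PUT payload from an instance name. The field names `host`, `port`, `type`, and `tags` are assumptions inferred from the note, not confirmed by this page, so check them against your controller's API documentation before use.

```python
import json


def instance_payload(instance_name, tags):
    """Split "Server_host_port" into separate fields (field names are assumed)."""
    prefix, rest = instance_name.split("_", 1)  # "Server", "host_port"
    host, port = rest.rsplit("_", 1)            # host may itself contain underscores
    return {
        "host": host,
        "port": int(port),
        "type": prefix.upper(),  # assumption: type is the upper-cased name prefix
        "tags": list(tags),
    }


# Hypothetical instance and tags.
payload = instance_payload(
    "Server_host1_8098", ["newTenant_OFFLINE", "DefaultTenant_REALTIME"]
)
body = json.dumps(payload)  # request body for PUT /instances/{instanceName}
```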

When upsizing/downsizing a cluster, you will need to make sure that the host names of servers are consistent. You can do this by setting the following config parameter:

Replication changes

In order to change the replication factor of a table, update the table config as follows:

OFFLINE table - update the replication field

REALTIME table - update the replicasPerPartition field
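The sketch below shows one way to apply this change to a table config held as a Python dict. It assumes both fields live under `segmentsConfig`, are stored as strings, and that the config carries a `tableType` key; verify this against your own table config.

```python
import copy


def with_replication(table_config, replicas):
    """Return a copy of the table config with the replication factor changed."""
    updated = copy.deepcopy(table_config)
    # OFFLINE tables use "replication"; REALTIME tables use "replicasPerPartition".
    field = "replicasPerPartition" if updated["tableType"] == "REALTIME" else "replication"
    # Assumption: the field sits in segmentsConfig and is stored as a string.
    updated.setdefault("segmentsConfig", {})[field] = str(replicas)
    return updated


# Hypothetical table configs.
offline = {"tableName": "myTable", "tableType": "OFFLINE", "segmentsConfig": {"replication": "1"}}
realtime = {"tableName": "myTable", "tableType": "REALTIME", "segmentsConfig": {"replicasPerPartition": "1"}}

offline_updated = with_replication(offline, 3)
realtime_updated = with_replication(realtime, 3)
```

The updated config still has to be saved to the cluster and followed by a rebalance for the new replication to take effect.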

Segment Assignment changes

The most common segment assignment change is moving from the default segment assignment to replica group segment assignment. Discussing the details of the segment assignment is beyond the scope of this page. More details can be found in Routing and in this FAQ question.

Table Migration to a different tenant

If you need to move a table across tenants, for example when the table was previously assigned to one Pinot tenant and you now want to move it to a separate one, call the rebalance API with reassignInstances set to true.

Rebalance Algorithms

Currently, two rebalance algorithms are supported: the default algorithm and the minimal data movement algorithm.

The Default Algorithm

This algorithm is used in most cases. When the reassignInstances parameter is set to true, the final instance assignment lists are recomputed, and the list of instances is sorted per partition per replica group. Whenever the table rebalance runs, segment assignment respects the order of the sorted list and picks the relevant instances.

Minimal Data Movement Algorithm

This algorithm focuses on minimizing data movement during table rebalance. When the reassignInstances parameter is set to true and this algorithm is enabled, instances that are still alive keep their positions, and vacant seats are filled with newly added instances or with the last instances among the existing live candidates. As a result, only the instances that change position are involved in data movement.
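The seat-filling idea can be sketched as follows. This is a simplified illustration on a single flat list of instances, not Pinot's actual implementation; it ignores partitions and replica groups, and the function name is hypothetical.

```python
def assign_with_minimal_movement(previous, live):
    """Keep surviving instances in place and fill vacated seats with minimal moves.

    previous: instance names in their old seat order.
    live: instance names currently available (survivors plus newly added).
    """
    live_set = set(live)
    previous_set = set(previous)
    # Survivors keep their seat; seats of removed instances become vacant (None).
    seats = [name if name in live_set else None for name in previous]
    newcomers = [name for name in live if name not in previous_set]

    # First choice: fill vacant seats with newly added instances.
    for i, seat in enumerate(seats):
        if seat is None and newcomers:
            seats[i] = newcomers.pop(0)

    # Otherwise: pull instances from the tail into the remaining vacant seats.
    while None in seats:
        tail = seats.pop()
        if tail is not None:
            seats[seats.index(None)] = tail

    # Any newcomers left over (pure upsizing) take new seats at the end.
    seats.extend(newcomers)
    return seats


replaced = assign_with_minimal_movement(["s1", "s2", "s3", "s4"], ["s1", "s3", "s4", "s5"])
downsized = assign_with_minimal_movement(["s1", "s2", "s3", "s4"], ["s1", "s3", "s4"])
```

In the replacement case only the new instance `s5` takes the vacated seat; in the downsizing case only `s4` changes position, while `s1` and `s3` stay where they were.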

To switch to this rebalance algorithm, set the following config in the table config before triggering the table rebalance:

When instanceAssignmentConfigMap is not explicitly configured, the minimizeDataMovement flag can also be set in segmentsConfig:

Running a Rebalance

After making any of the changes described above, run a rebalance for them to take effect.

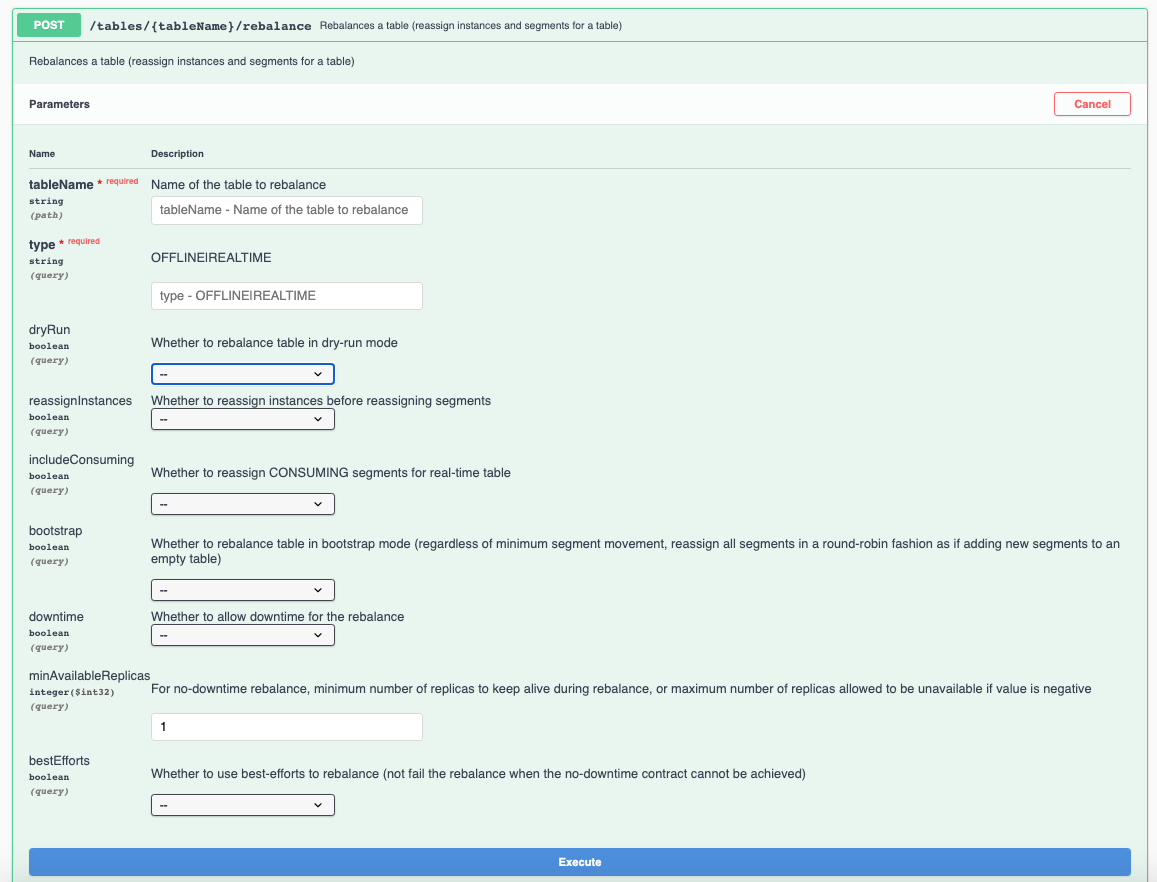

To run a rebalance, use the following API.

POST /tables/{tableName}/rebalance?type=<OFFLINE/REALTIME>

This API has a lot of parameters to control its behavior. Make sure to go over them and change the defaults as needed.

Note

Typically, the flags that need to be changed from their defaults are:

includeConsuming=true for REALTIME

downtime=true if you have only 1 replica, or prefer faster rebalance at the cost of a momentary downtime

| Query param | Default value | Description |
| --- | --- | --- |
| dryRun | false | If set to true, rebalance is run as a dry-run so that you can see the expected changes to the ideal state and instance partition assignment. |
| includeConsuming | false | Applicable for REALTIME tables. CONSUMING segments are rebalanced only if this is set to true. Moving a CONSUMING segment involves dropping the data consumed so far on the old server and re-consuming on the new server. If an application is sensitive to increased memory utilization due to re-consumption or to momentary data staleness, it may choose not to include consuming segments in the rebalance. Whenever the CONSUMING segment completes, the completed segment will be assigned to the right instances, and the new CONSUMING segment will also be started on the correct instances. If you choose includeConsuming=false and let the segments move later on, any downsized nodes need to remain untagged in the cluster until the segment completion happens. |
| downtime | false | This controls whether Pinot allows downtime while rebalancing. If downtime=true, all replicas of a segment can be moved around in one go, which could result in a momentary downtime for that segment (the time gap between the ideal state being updated to the new servers and the new servers downloading the segments). If downtime=false, Pinot will make sure to keep a certain number of replicas (configured in the next row) always up. The rebalance will be done in multiple iterations under the hood in order to fulfill this constraint. Note: If you have only 1 replica for your table, rebalance with downtime=false is not possible. |
| minAvailableReplicas | 1 | Applicable for rebalance with downtime=false. This is the minimum number of replicas that are expected to stay alive through the rebalance. |
| bestEfforts | false | Applicable for rebalance with downtime=false. If a no-downtime rebalance cannot be performed successfully, this flag controls whether to fail the rebalance or do a best-effort rebalance. |
| reassignInstances | false | Applicable to tables where the instance assignment has been persisted to ZooKeeper. Setting this to true will make the rebalance first update the instance assignment, and then rebalance the segments. |
| bootstrap | false | Rebalances all segments again, as if adding segments to an empty table. If this is false, then the rebalance will try to minimize segment movements. |
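As a sketch of how these parameters combine into a rebalance call, the helper below builds the request URL and rejects parameter names not listed above. The controller address, table name, and helper name are assumptions for illustration; only non-default overrides are put on the query string.

```python
from urllib.parse import urlencode

# Defaults as listed in the parameter descriptions above.
REBALANCE_DEFAULTS = {
    "dryRun": False,
    "includeConsuming": False,
    "downtime": False,
    "minAvailableReplicas": 1,
    "bestEfforts": False,
    "reassignInstances": False,
    "bootstrap": False,
}


def build_rebalance_url(table, table_type, controller="http://localhost:9000", **overrides):
    """Build the POST URL for a rebalance; the controller address is an assumption."""
    if table_type not in ("OFFLINE", "REALTIME"):
        raise ValueError(f"unknown table type: {table_type}")
    unknown = set(overrides) - set(REBALANCE_DEFAULTS)
    if unknown:
        raise ValueError(f"unknown rebalance parameters: {sorted(unknown)}")
    params = {"type": table_type}
    for name, value in overrides.items():
        # Booleans are rendered as lowercase true/false in the query string.
        params[name] = str(value).lower() if isinstance(value, bool) else str(value)
    return f"{controller}/tables/{table}/rebalance?{urlencode(params)}"


# Hypothetical dry run for a REALTIME table that also moves CONSUMING segments.
url = build_rebalance_url("myTable", "REALTIME", dryRun=True, includeConsuming=True)
```

Running with `dryRun=True` first and inspecting the reported changes before repeating the call without it is a cautious way to use these flags.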

Checking status

The following API is used to check the progress of a rebalance job. It takes the jobId of the rebalance job. The API for listing the jobIds of a table's rebalance jobs is shown next.

Note that the rebalanceStatus API is available from this commit.

Below is the API to get the jobIds of rebalance jobs for a given table. It takes the table name and the jobType, which is TABLE_REBALANCE.
