Running in Kubernetes

Pinot quick start in Kubernetes

Prerequisites

Kubernetes

Pinot

# Check out the Pinot repository

git clone https://github.com/apache/pinot.git

cd pinot/helm/pinot

Set up a Pinot cluster in Kubernetes

Start Pinot with Helm
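
A minimal sketch of this step, assuming you are still in the `pinot/helm/pinot` chart directory from the prerequisites. The namespace `pinot-quickstart`, the release name `pinot`, and the helper name `start_pinot` are illustrative assumptions, not values confirmed by this page.

```shell
# Assumed names; change them to match your environment.
PINOT_NAMESPACE="${PINOT_NAMESPACE:-pinot-quickstart}"
PINOT_RELEASE="${PINOT_RELEASE:-pinot}"

# Hypothetical helper: installs the chart found in the current directory.
start_pinot() {
  # Fetch any chart dependencies declared by the chart, then create the
  # namespace and install the release into it.
  helm dependency update . &&
  kubectl create namespace "$PINOT_NAMESPACE" &&
  helm install "$PINOT_RELEASE" . --namespace "$PINOT_NAMESPACE"
}
```

Run `start_pinot` from the chart directory. Keeping Pinot in its own namespace makes the later status checks and cleanup easier to scope.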

Check Pinot deployment status
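
One way to check the deployment, sketched under the assumption that Pinot was installed into a namespace named `pinot-quickstart` (the helper name `check_pinot_status` is hypothetical):

```shell
PINOT_NAMESPACE="${PINOT_NAMESPACE:-pinot-quickstart}"  # assumed namespace

# Hypothetical helper: lists the workloads and services in the namespace.
check_pinot_status() {
  # Re-run until every pod reports a Running status.
  kubectl get all --namespace "$PINOT_NAMESPACE"
}
```

Call `check_pinot_status` after the install; pods can take a few minutes to become ready.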

Load data into Pinot using Kafka

Bring up a Kafka cluster for real-time data ingestion

Check Kafka deployment status

Create Kafka topics
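
Topic creation might look like the sketch below. It assumes a broker pod named `kafka-0` in the `pinot-quickstart` namespace, that `kafka-topics.sh` is on the pod's `PATH`, and that the broker accepts unauthenticated connections on `localhost:9092`. None of these are confirmed by this page, so adjust them to your Kafka deployment. The helper name `create_kafka_topic` is hypothetical.

```shell
PINOT_NAMESPACE="${PINOT_NAMESPACE:-pinot-quickstart}"  # assumed namespace
KAFKA_POD="${KAFKA_POD:-kafka-0}"                       # assumed broker pod

# Hypothetical helper: creates a single-partition topic named by $1.
create_kafka_topic() {
  topic="$1"
  kubectl --namespace "$PINOT_NAMESPACE" exec "$KAFKA_POD" -- \
    kafka-topics.sh --bootstrap-server localhost:9092 \
    --create --topic "$topic" --partitions 1 --replication-factor 1
}
```

For example, `create_kafka_topic flights-realtime` creates a topic with that (illustrative) name. One partition and a replication factor of 1 suit a single-broker quick start only.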

Load data into Kafka and create Pinot schema/tables

Query with the Pinot Data Explorer

Pinot Data Explorer
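
The Data Explorer is served by the Pinot controller, which listens on port 9000 by default. A sketch for reaching it from your workstation, assuming the controller Service is named `pinot-controller` in the `pinot-quickstart` namespace (both names, and the helper `open_data_explorer`, are assumptions):

```shell
PINOT_NAMESPACE="${PINOT_NAMESPACE:-pinot-quickstart}"        # assumed namespace
CONTROLLER_SERVICE="${CONTROLLER_SERVICE:-pinot-controller}"  # assumed Service

# Hypothetical helper: forwards local port 9000 to the controller.
open_data_explorer() {
  # While this runs, browse to http://localhost:9000
  kubectl port-forward "service/$CONTROLLER_SERVICE" 9000:9000 \
    --namespace "$PINOT_NAMESPACE"
}
```

The port-forward stays in the foreground; stop it with Ctrl+C when you are done querying.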

Query Pinot with Superset

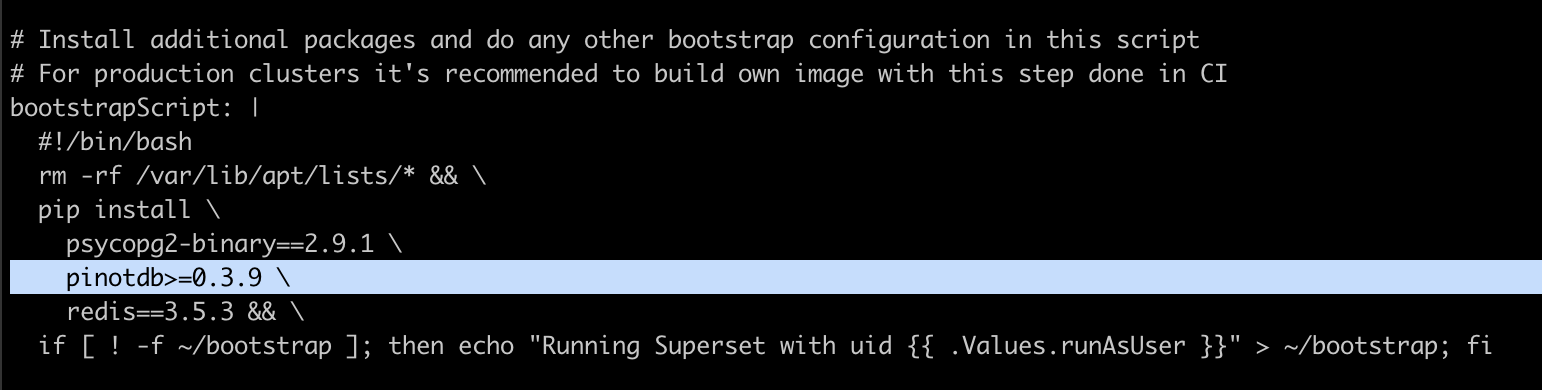

Bring up Superset using Helm

Access the Superset UI

Access Pinot with Trino

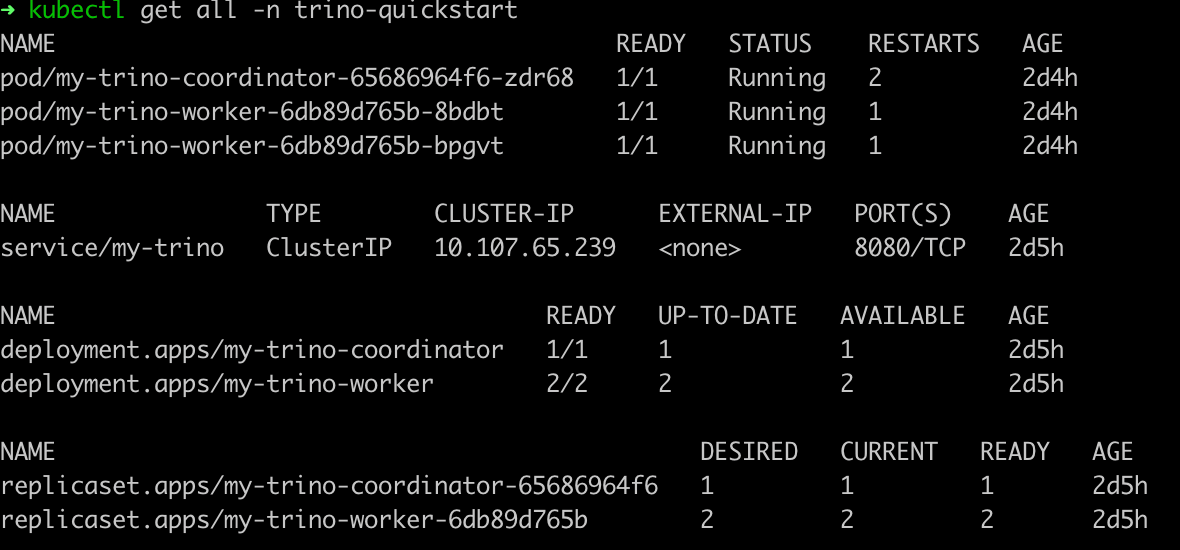

Deploy Trino

Query Pinot with the Trino CLI

Sample queries to execute

List all catalogs

List all tables

Show schema

Count total documents
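
The four sample queries in this section can be run non-interactively with the Trino CLI's `--execute` option. This is a sketch only: the CLI executable name `trino`, the coordinator address `localhost:8080`, the catalog `pinot`, the schema `default`, and the table `airlinestats` are all assumptions to replace with your own values, and the helper names are hypothetical.

```shell
TRINO_SERVER="${TRINO_SERVER:-localhost:8080}"  # assumed coordinator address
PINOT_TABLE="${PINOT_TABLE:-airlinestats}"      # assumed example table

# Hypothetical helper: runs one SQL statement against the assumed catalog.
run_trino_query() {
  trino --server "$TRINO_SERVER" --catalog pinot --schema default --execute "$1"
}

# Runs the four sample queries in order.
run_sample_queries() {
  run_trino_query "SHOW CATALOGS"                      # list all catalogs
  run_trino_query "SHOW TABLES"                        # list all tables
  run_trino_query "DESCRIBE $PINOT_TABLE"              # show schema
  run_trino_query "SELECT count(*) FROM $PINOT_TABLE"  # count total documents
}
```

Call `run_sample_queries` once the Trino coordinator is reachable from your shell, for example through a port-forward.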

Access Pinot with Presto

Deploy Presto with the Pinot plugin

Query Presto using the Presto CLI

Sample queries to execute

List all catalogs

List all tables

Show schema

Count total documents

Delete a Pinot cluster in Kubernetes
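
If everything for this quick start lives in one dedicated namespace, deleting that namespace removes the cluster. The sketch below assumes the namespace is `pinot-quickstart`; the helper name `delete_pinot_cluster` is hypothetical.

```shell
PINOT_NAMESPACE="${PINOT_NAMESPACE:-pinot-quickstart}"  # assumed namespace

# Hypothetical helper: removes the namespace and every resource inside it.
delete_pinot_cluster() {
  kubectl delete namespace "$PINOT_NAMESPACE"
}
```

Deleting a namespace also removes any other releases installed into it, so check its contents before running `delete_pinot_cluster`.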
