$ curl -H "Content-Type: application/json" -X POST \
   -d '{"sql":"select foo, count(*) from myTable group by foo limit 100"}' \
   http://localhost:8099/query/sql

$ curl -k -H "Content-Type: application/json" -X POST \
   -d '{"sql":"select foo, count(*) from myTable group by foo limit 100"}' \
   https://localhost:8099/query/sql

$ curl -H "Content-Type: application/json" -X POST \
   -d '{"sql":"select foo, count(*) from myTable where foo='"'"'abc'"'"' limit 100"}' \
   http://localhost:8099/query/sql
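The `'"'"'` sequence in the filtered query above closes the single-quoted shell string, adds a literal single quote, and reopens the string. As a minimal sketch of an alternative, the payload can be built in a variable first. The variable names `query` and `payload` are illustrative and do not come from the original commands. This approach assumes the query text contains no double quotes or backslashes, since nothing here escapes them for JSON.

```shell
#!/bin/sh
# Illustrative variable names; not part of the original examples.
# The SQL sits inside double quotes, so single quotes need no special handling.
query="select foo, count(*) from myTable where foo='abc' limit 100"

# Wrap the query in the {"sql": ...} envelope used by the commands above.
# Assumption: the query has no double quotes or backslashes (no JSON escaping is done).
payload=$(printf '{"sql":"%s"}' "$query")

# Print the payload so it can be inspected before sending.
printf '%s\n' "$payload"

# To send it to the same endpoint as the examples above (requires a running broker):
# curl -H "Content-Type: application/json" -X POST -d "$payload" http://localhost:8099/query/sql
```

Printing the payload before sending makes quoting mistakes visible without a round trip to the broker.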

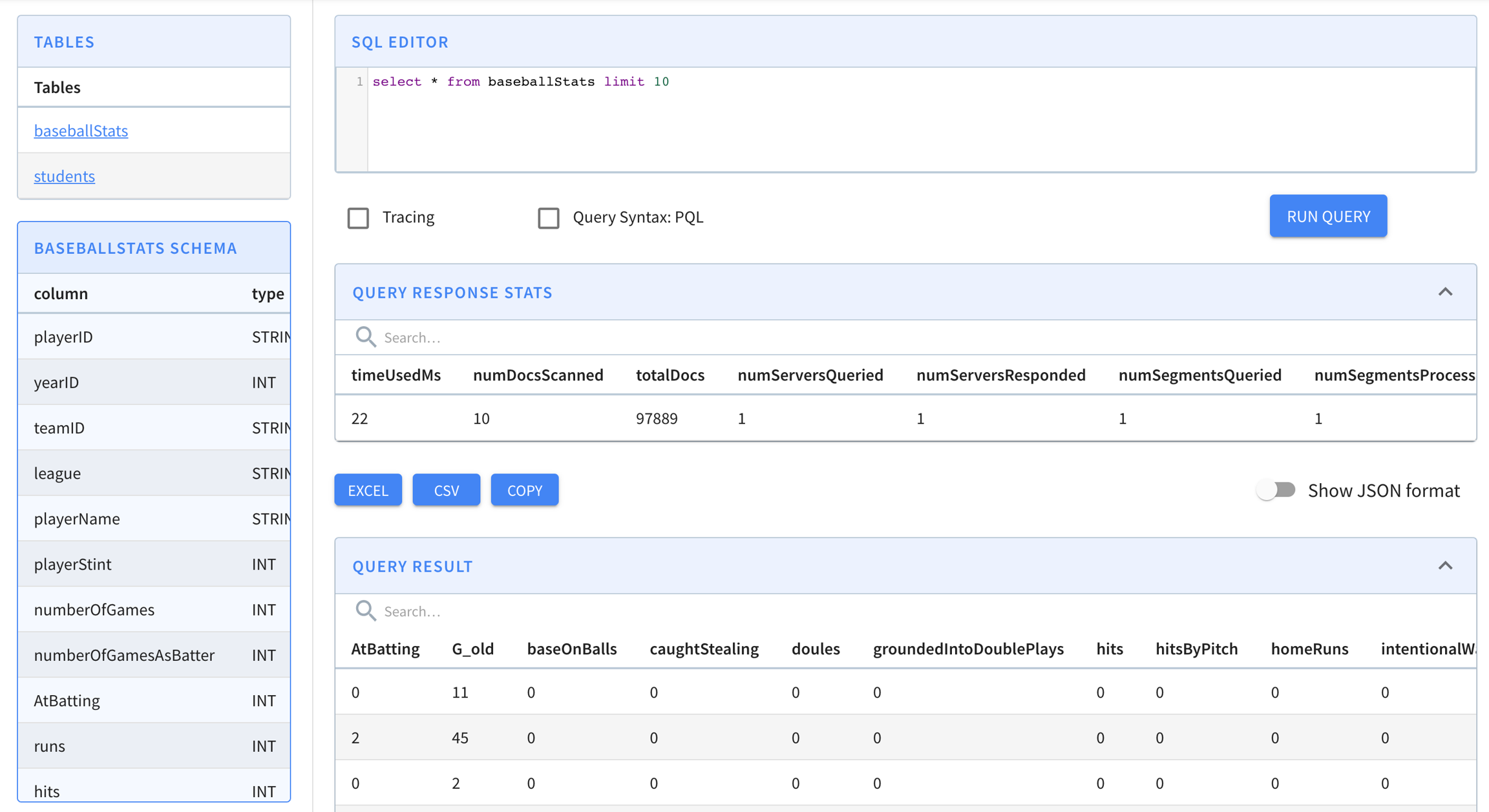

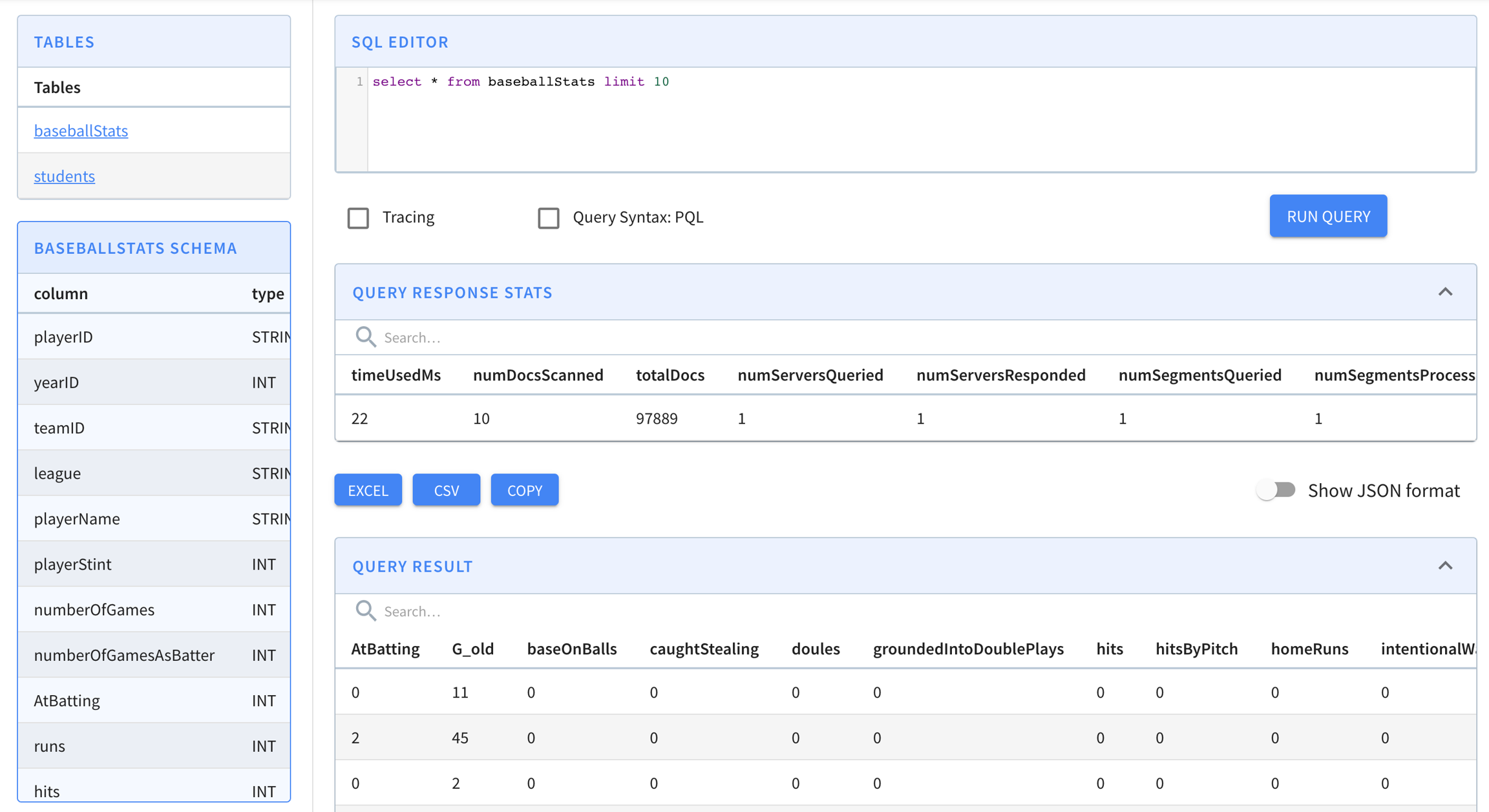

cd incubator-pinot/pinot-tools/target/pinot-tools-pkg
bin/pinot-admin.sh PostQuery \
  -queryType sql \
  -brokerPort 8000 \
  -query "select count(*) from baseballStats"

2020/03/04 12:46:33.459 INFO [PostQueryCommand] [main] Executing command: PostQuery -brokerHost localhost -brokerPort 8000 -queryType sql -query select count(*) from baseballStats
2020/03/04 12:46:33.854 INFO [PostQueryCommand] [main] Result: {"resultTable":{"dataSchema":{"columnDataTypes":["LONG"],"columnNames":["count(*)"]},"rows":[[97889]]},"exceptions":[],"numServersQueried":1,"numServersResponded":1,"numSegmentsQueried":1,"numSegmentsProcessed":1,"numSegmentsMatched":1,"numConsumingSegmentsQueried":0,"numDocsScanned":97889,"numEntriesScannedInFilter":0,"numEntriesScannedPostFilter":0,"numGroupsLimitReached":false,"totalDocs":97889,"timeUsedMs":185,"segmentStatistics":[],"traceInfo":{},"minConsumingFreshnessTimeMs":0}

$ curl -X POST \
   -d '{"sql":"SELECT SUM(moo), MAX(bar), COUNT(*) FROM myTable"}' \
   localhost:8099/query/sql -H "Content-Type: application/json"

{
  "exceptions": [],
  "minConsumingFreshnessTimeMs": 0,
  "numConsumingSegmentsQueried": 0,
  "numDocsScanned": 6,
  "numEntriesScannedInFilter": 0,
  "numEntriesScannedPostFilter": 12,
  "numGroupsLimitReached": false,
  "numSegmentsMatched": 2,
  "numSegmentsProcessed": 2,
  "numSegmentsQueried": 2,
  "numServersQueried": 1,
  "numServersResponded": 1,
  "resultTable": {
    "dataSchema": {
      "columnDataTypes": [
        "DOUBLE",
        "DOUBLE",
        "LONG"
      ],
      "columnNames": [
        "sum(moo)",
        "max(bar)",
        "count(*)"
      ]
    },
    "rows": [
      [
        62335,
        2019.0,
        6
      ]
    ]
  },
  "segmentStatistics": [],
  "timeUsedMs": 87,
  "totalDocs": 6,
  "traceInfo": {}
}

$ curl -X POST \
   -d '{"sql":"SELECT SUM(moo), MAX(bar) FROM myTable GROUP BY foo ORDER BY foo"}' \
   localhost:8099/query/sql -H "Content-Type: application/json"

{
  "exceptions": [],
  "minConsumingFreshnessTimeMs": 0,
  "numConsumingSegmentsQueried": 0,
  "numDocsScanned": 6,
  "numEntriesScannedInFilter": 0,
  "numEntriesScannedPostFilter": 18,
  "numGroupsLimitReached": false,
  "numSegmentsMatched": 2,
  "numSegmentsProcessed": 2,
  "numSegmentsQueried": 2,
  "numServersQueried": 1,
  "numServersResponded": 1,
  "resultTable": {
    "dataSchema": {
      "columnDataTypes": [
        "STRING",
        "DOUBLE",
        "DOUBLE"
      ],
      "columnNames": [
        "foo",
        "sum(moo)",
        "max(bar)"
      ]
    },
    "rows": [
      [
        "abc",
        560.0,
        2008.0
      ],
      [
        "pqr",
        21760.0,
        2010.0
      ],
      [
        "xyz",
        40015.0,
        2019.0
      ]
    ]
  },
  "segmentStatistics": [],
  "timeUsedMs": 15,
  "totalDocs": 6,
  "traceInfo": {}
}

curl -X POST \
  -d '{"pql":"select * from flights limit 3"}' \
  http://localhost:8099/query

{
  "selectionResults":{
    "columns":[
      "Cancelled",
      "Carrier",
      "DaysSinceEpoch",
      "Delayed",
      "Dest",
      "DivAirports",
      "Diverted",
      "Month",
      "Origin",
      "Year"
    ],
    "results":[
      [
        "0",
        "AA",
        "16130",
        "0",
        "SFO",
        [],
        "0",
        "3",
        "LAX",
        "2014"
      ],
      [
        "0",
        "AA",
        "16130",
        "0",
        "LAX",
        [],
        "0",
        "3",
        "SFO",
        "2014"
      ],
      [
        "0",
        "AA",
        "16130",
        "0",
        "SFO",
        [],
        "0",
        "3",
        "LAX",
        "2014"
      ]
    ]
  },
  "traceInfo":{},
  "numDocsScanned":3,
  "aggregationResults":[],
  "timeUsedMs":10,
  "segmentStatistics":[],
  "exceptions":[],
  "totalDocs":102
}

curl -X POST \
  -d '{"pql":"select count(*) from flights"}' \
  http://localhost:8099/query

{
  "traceInfo":{},
  "numDocsScanned":17,
  "aggregationResults":[
    {
      "function":"count_star",
      "value":"17"
    }
  ],
  "timeUsedMs":27,
  "segmentStatistics":[],
  "exceptions":[],
  "totalDocs":17
}

curl -X POST \
  -d '{"pql":"select count(*) from flights group by Carrier"}' \
  http://localhost:8099/query

{
  "traceInfo":{},
  "numDocsScanned":23,
  "aggregationResults":[
    {
      "groupByResult":[
        {
          "value":"10",
          "group":["AA"]
        },
        {
          "value":"9",
          "group":["VX"]
        },
        {
          "value":"4",
          "group":["WN"]
        }
      ],
      "function":"count_star",
      "groupByColumns":["Carrier"]
    }
  ],
  "timeUsedMs":47,
  "segmentStatistics":[],
  "exceptions":[],
  "totalDocs":23
}